Running Crate Data on CoreOS and Docker


Recently I’ve been playing around with CoreOS, a fairly new Linux distribution. Unlike most of the new distributions that keep popping up, CoreOS brings some interesting new ideas and does a lot of things differently from conventional distributions.

It is very minimal and targeted at cloud and clustered environments. Its core components are systemd, Docker and Btrfs, plus two interesting additions called etcd and fleet. Etcd is a distributed key-value store, and fleet extends systemd into a distributed init system.

The main idea is that almost everything runs in a Docker container. To run an application, you create a unit file for fleet/systemd and submit it to the cluster using fleetctl.

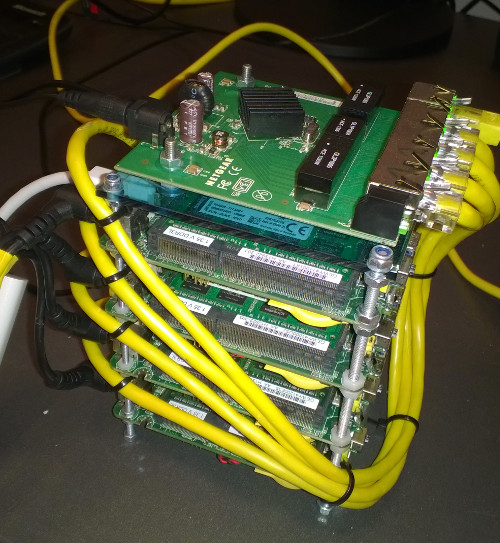

I tried to see how I could get Crate Data to run on a 4 node NUC/CoreOS cluster:

In case you don’t know Crate Data: it is a fully distributed data store, which makes it a perfect fit for a Linux distribution that was built with clusters in mind.

If you want to follow along, I assume you already have a CoreOS cluster up and running. If you don’t, take a look at the CoreOS installation documentation.

Fleet is controlled using the fleetctl command-line tool. It can be invoked either from a CoreOS machine, where it is pre-installed, or from your regular computer if you choose to install it there.

In order to run Crate I created a service file called crate@.service on my machine with the following content:

    [Unit]
    Description=crate
    After=docker.service
    Requires=docker.service

    [Service]
    # Pulling the image for the first time can take a while
    TimeoutSec=180
    ExecStartPre=/usr/bin/mkdir -p /data/crate
    ExecStartPre=/usr/bin/docker pull crate/crate
    ExecStart=/usr/bin/docker run \
        --name %p-%i \
        --publish 4200:4200 \
        --publish 4300:4300 \
        --volume /data/crate:/data \
        crate/crate \
        /crate/bin/crate \
        -Des.node.name=%p%i
    ExecStop=/usr/bin/docker stop %p-%i
    ExecStop=/usr/bin/docker rm %p-%i

    [X-Fleet]
    X-Conflicts=%p@*.service

I then submitted it to the cluster using fleet:

    fleetctl --tunnel=nuc4 submit crate@{1,2,3,4}.service

I used the --tunnel flag because I ran fleetctl from my own machine and had to connect to nuc4 (one of the machines in the cluster) in order to reach fleet. Note that fleetctl currently doesn’t recognize any settings made in .ssh/config and always uses the core user.
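The `crate@{1,2,3,4}.service` argument relies on brace expansion in the calling shell (bash or zsh), so fleetctl receives four separate unit names, each an instance of the crate@.service file. As a small sketch, the hypothetical helper `unit_names` below (my own name, not part of fleetctl) builds the same list with a plain loop, which also works in shells without brace expansion:

```shell
#!/bin/sh
# Hypothetical helper, not part of fleetctl: print the instance unit names
# that crate@{1,2,3,4}.service expands to in bash.
unit_names() {
    template=$1   # template prefix, e.g. "crate"
    count=$2      # number of instances
    i=1
    names=""
    while [ "$i" -le "$count" ]; do
        # Append the next name, separated by a single space.
        names="$names${names:+ }$template@$i.service"
        i=$((i + 1))
    done
    printf '%s\n' "$names"
}

unit_names crate 4
```

The output could then be passed on unquoted, for example `fleetctl --tunnel=nuc4 submit $(unit_names crate 4)`.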

Once submitted, the services can be started with fleetctl start:

    fleetctl --tunnel=nuc4 start crate@{1,2,3,4}.service
    Unit crate@3.service launched on 83c9971f.../192.168.0.71
    Unit crate@4.service launched on bdbf9df0.../192.168.0.88
    Unit crate@2.service launched on 1144ce23.../192.168.0.34
    Unit crate@1.service launched on bde80062.../192.168.0.26

Note that fleet decides which nodes of the cluster the services run on.

    fleetctl list-units
    UNIT             MACHINE                   ACTIVE  SUB
    crate@1.service  83c9971f.../192.168.0.71  active  running
    crate@2.service  bdbf9df0.../192.168.0.88  active  running
    crate@3.service  bde80062.../192.168.0.26  active  running
    crate@4.service  1144ce23.../192.168.0.34  active  running

Once a crate service is started, a folder named /data/crate is created on each CoreOS node. In addition, the crate Docker image is pulled, and once that has finished the container is launched.

The TimeoutSec setting is important because pulling a Docker image for the first time takes a while, which might otherwise cause the service to time out.

If you’re familiar with systemd service files, you’ll notice that the crate service file looks pretty much like a regular one. The only difference is the [X-Fleet] section, which ensures that only one service named crate@*.service is assigned per node.
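The unit file also relies on the systemd specifiers %p (the part of the unit name before the "@") and %i (the instance name after it). The following sketch mimics that substitution with shell parameter expansion to show what the container name and the Crate node name end up as; `resolve_specifiers` is a made-up helper for illustration only, systemd does this internally:

```shell
#!/bin/sh
# Sketch: mimic how systemd resolves %p and %i for an instantiated unit.
# resolve_specifiers is a hypothetical helper for illustration only.
resolve_specifiers() {
    unit=$1                  # e.g. "crate@1.service"
    name=${unit%.service}    # strip the type suffix   -> "crate@1"
    prefix=${name%%@*}       # %p: part before the "@" -> "crate"
    instance=${name#*@}      # %i: part after the "@"  -> "1"
    # --name %p-%i and -Des.node.name=%p%i from the unit file
    printf 'container=%s-%s node=%s%s\n' "$prefix" "$instance" "$prefix" "$instance"
}

resolve_specifiers crate@1.service
# prints: container=crate-1 node=crate1
```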

Unfortunately, at this point I figured out that multicast doesn’t work if the Docker containers run on different hosts.

There is a GitHub issue about that in the Docker repository, but so far without a solution.

So we have to change our service file to use unicast. For unicast we need a list of all the hosts running Crate. The problem is that we don’t know in advance which hosts those will be, since fleet manages the assignment.

To work around that, we can set up a simple form of service discovery.

Create a file called crate-discovery@.service with the following contents:

    [Unit]
    Description=Crate discovery service
    BindsTo=crate@%i.service

    [Service]
    ExecStart=/bin/bash -c "\
        while true; do \
            etcdctl set /services/crate/%H '{\"http\": 4200, \"transport\": 4300 }' --ttl 60; \
            sleep 45; \
        done;"
    ExecStop=/usr/bin/etcdctl rm /services/crate/%H

    [X-Fleet]
    X-ConditionMachineOf=crate@%i.service

This pattern is described in the CoreOS documentation as a sidekick.

It runs on the same node as the corresponding crate service and regularly announces to etcd that the service is alive.
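The sidekick is essentially a heartbeat: the key expires after 60 seconds (`--ttl 60`) unless it is rewritten, and the loop rewrites it every 45 seconds, so a stale entry disappears on its own at most a minute after the unit or machine dies. Below is a dry-run sketch of a single heartbeat. The `ETCDCTL` variable and the `announce` function are names I made up for this example, and by default the command is only printed rather than executed:

```shell
#!/bin/sh
# Dry-run sketch of one sidekick heartbeat. ETCDCTL and announce are
# hypothetical names; set ETCDCTL=etcdctl on a CoreOS node to really write.
ETCDCTL=${ETCDCTL:-echo etcdctl}
TTL=60        # seconds until etcd drops the key if it is not refreshed
INTERVAL=45   # refresh period used by the unit's loop; must stay below TTL

announce() {
    host=$1
    # $ETCDCTL is left unquoted on purpose so that "echo etcdctl" splits
    # into a command plus its first argument.
    $ETCDCTL set "/services/crate/$host" '{"http": 4200, "transport": 4300}' --ttl "$TTL"
}

announce nuc4
```

Keeping the refresh interval below the TTL leaves 15 seconds of slack, so a slightly delayed refresh does not make the node vanish from the list.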

After we’ve created the crate-discovery service we can again submit and start it using fleetctl.

    fleetctl --tunnel=nuc4 submit crate-discovery@{1,2,3,4}.service
    fleetctl --tunnel=nuc4 start crate-discovery@{1,2,3,4}.service

The previously created crate@.service has to be adapted a little to make use of the information provided by the discovery service. Let’s modify the ExecStart= line:

    ExecStart=/bin/bash -c '\
        HOSTS=$(etcdctl ls /services/crate \
            | sed "s/\/services\/crate\///g" \
            | sed "s/$/:4300/" \
            | paste -s -d","); \
        /usr/bin/docker run \
            --name %p-%i \
            --publish 4200:4200 \
            --publish 4300:4300 \
            --volume /data/crate:/data \
            crate/crate \
            /crate/bin/crate \
            -Des.node.name=%p%i \
            -Des.multicast.enabled=false \
            -Des.network.publish_host=%H \
            -Des.discovery.zen.ping.unicast.hosts=$HOSTS'

We now use etcdctl to get a list of all hosts that have announced themselves to etcd. Using that list, we start the crate container and pass it as the unicast.hosts argument to the crate executable.
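To see what the HOSTS pipeline produces without a running etcd, it can be fed with sample data. This is a sketch under a few assumptions: the host names are made up, the `build_unicast_hosts` wrapper is my own, and the first sed expression is written with `|` as delimiter, which should behave the same as the escaped-slash version in the unit file for this kind of input:

```shell
#!/bin/sh
# Sketch of the HOSTS pipeline from the unit file, fed with sample data
# instead of a live `etcdctl ls /services/crate` call.
build_unicast_hosts() {
    # 1. strip the key prefix, 2. append the transport port,
    # 3. join all lines with commas (the "-" makes paste read stdin).
    sed 's|^/services/crate/||' \
        | sed 's/$/:4300/' \
        | paste -s -d, -
}

# Sample key listing; the host names are made up for this example.
printf '%s\n' /services/crate/nuc1 /services/crate/nuc2 /services/crate/nuc3 \
    | build_unicast_hosts
# prints: nuc1:4300,nuc2:4300,nuc3:4300
```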

In order to re-deploy the changed file the destroy

subcommand of fleetctl can be used:

fleetctl --tunnel=nuc4 destroy crate@{1,2,3,4}.service

fleetctl --tunnel=nuc4 submit crate@{1,2,3,4}.service

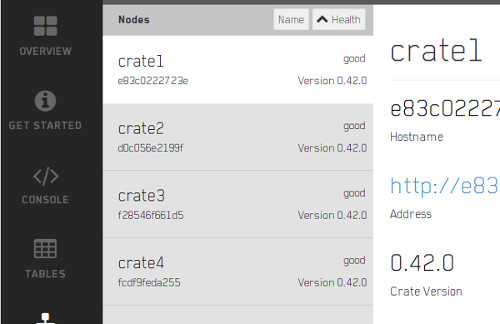

fleetctl --tunnel=nuc4 start crate@{1,2,3,4}.serviceAnd finally we should see the crate cluster up and running:

In case you want to try this yourself, both service files are available as a gist.

Update

The setup I’ve described here has been extended a bit and is now actually in use to power play.crate.io, an online playground for Crate. So if you want to try a Crate cluster that is running on CoreOS, you should check it out.